Mastering Browser Cache

Part of the series: Vue.js Performance

Until now, the Vue.js Performance series has focused on bundle size. While it’s certainly one of the most important aspects of app performance, it’s not the only one. To build fast web applications, it’s not enough to serve the smallest possible assets. It’s also important to reuse them whenever possible so users don’t wait for something they have already downloaded. If you have read the previous parts of this series, you most likely know enough about bundle size to feel confident in that area. This is why in this article I will focus on another technique that can significantly improve your loading times: caching.

Browsers’ built-in cache

Have you noticed that the pages you visit most often load a little faster than others? This happens because our browsers are smart enough to cache files that may be useful in the future.

Caching is the process of storing commonly used data in quick-to-access memory so it can be used immediately after it’s requested. Because of that, our browsers can sometimes skip the network and immediately access files that we regularly use from our own machine.

So how fast can the cache be?

The fastest one is the CPU cache.

- L1 cache is responsible for keeping the data that is currently used. Because the space is really small, it’s also the fastest to access (~1-2 ns).

- L2 cache keeps the data that will probably be needed in a moment (~4 ns).

In modern CPUs there is also an L3 cache, which is sometimes used as memory shared between CPU cores (each core usually has its own L1 and L2 cache).

Apart from the CPU cache there is RAM, which can be much bigger but whose access time is also much higher (on average 100x slower than L1). The slowest storage is the hard drive.

Our browsers can store cached files in the Memory Cache, which lives in RAM and persists until the browser is closed, and in the Disk Cache, which persists much longer but lives on the hard drive and takes much longer to access.

You can see this for yourself. Open the Network tab in your developer tools on any website and refresh the page a few times. You will see that static assets like CSS, HTML, JavaScript files and images are served from the Memory Cache.

Now close the browser and open the same page again.

You will see the same assets being served from the disk cache! They are kept there for as long as the cache headers specify. This is why, even after closing the browser, the pages that you visit very often load faster than others.
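To make "cache headers" a bit more concrete, here is a minimal sketch of how a server could pick a Cache-Control value for each file. The function name, the filename pattern and the one-year lifetime are assumptions made for illustration, not something a particular backend prescribes.

```javascript
// Hypothetical helper: choose a Cache-Control header value for a static file.
// Assumes hashed filenames look like "app.3f2a9c1b.js" (8+ hex characters).
const HASHED_FILE = /\.[0-9a-f]{8,}\.(?:js|css|png|jpg|svg|woff2?)$/i;

function cacheControlFor(path) {
  if (HASHED_FILE.test(path)) {
    // The content never changes under this name, so it can be cached for a year.
    return 'public, max-age=31536000, immutable';
  }
  // Unhashed files (like index.html) should be revalidated with the server.
  return 'no-cache';
}

console.log(cacheControlFor('/js/app.3f2a9c1b.js')); // → public, max-age=31536000, immutable
console.log(cacheControlFor('/index.html'));         // → no-cache
```

Long lifetimes are only safe for files whose name changes together with their content, which is exactly what the [hash] in bundle names gives us.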

Even though we can’t benefit from the fastest CPU cache, both available storages respond much faster than network requests, which can take even a few seconds to complete under poor network conditions.

Bundling for browser cache

As we’ve just seen we don’t need to do anything to let our users benefit from browser caching (assuming your backend/infra team did a good job with cache headers). It seems like browsers are dealing with everything by themselves pretty well!

There is something we could do though to better utilize its capabilities. If we manage to separate parts of our app that are not changing very often from the ones that change more regularly we will make sure that at least part of our website will be able to benefit from the browser cache.

Imagine a web app that requests the following assets:

- app.[hash].js with a root component and app entry point

- common.[hash].js with common dependencies used in every route like vue, vue-router, vuex etc.

- home.[hash].js home page chunk with page-specific dependencies

- product.[hash].js product page chunk with page-specific dependencies

The part that matters most here is the common.[hash].js file. When is it requested, and when is it invalidated?

It turns out that this file is needed on both routes and is invalidated only if we add a new app-level dependency (the hash changes whenever we modify the file). Because of that, it’s much less likely to change, and we can reuse it from the cache even if the route code changes and has to be downloaded again. By putting common, rarely changing parts of your app into separate bundles you’re sparing your users a bit of waiting!

You don’t necessarily have to put all root-level dependencies in one file. Sometimes it’s worth having a separate file for each dependency (here you can find a very good analysis of this approach) or for specific groups of them. In that case adding a new one won’t invalidate the whole common.js file.
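One possible way to get such a common chunk in a Vue CLI project is to adjust webpack's splitChunks option in vue.config.js. The sketch below is only an assumption of how this could look: the chunk name, the package list and the priority are made up for illustration, and Vue CLI already ships its own default vendor split, so check what your build produces before overriding it.

```javascript
// vue.config.js (sketch): put app-level dependencies into their own chunk.
// The chunk name "common" and the package list are illustrative assumptions.
const config = {
  configureWebpack: {
    optimization: {
      splitChunks: {
        cacheGroups: {
          common: {
            // Match modules that come from these packages in node_modules.
            test: /[\\/]node_modules[\\/](vue|vue-router|vuex)[\\/]/,
            name: 'common',
            chunks: 'all',
            priority: 10,
          },
        },
      },
    },
  },
};

module.exports = config;
```

With a setup like this, changing a page component changes only that page's chunk hash, while the common chunk keeps its name and stays in the browser cache.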

When browser cache is not enough

There is a lot you can do to make your code well-optimized for browser cache and it’s never a wasted effort but browser cache has a lot of limitations.

The first issue is its size. The browser cache is rather small, so cached assets are easily evicted to make room for new ones. That usually happens when we browse a lot of websites.

Another is its uncertain behavior. Each browser engine implements its own caching mechanism slightly differently from the others. In addition, some device settings can affect how it works.

Probably the biggest issue of browser caching is that we as developers need to adjust to its behavior instead of adjusting its behavior. No one knows better how to treat application assets than the developer who created the app. It’s us who should be in charge of the caching!

Also, we can retrieve files from the browser cache only when we have stable network connectivity. No matter what we have in there, if we’re offline we will always see the same picture.

Thankfully, not so long ago two browser features were standardized that can solve the above issues: Service Workers and the Cache API.

Service Workers and Cache API

On web.dev we can read the following about the Cache API:

“The Cache API is a system for storing and retrieving network requests and their corresponding responses. These might be regular requests and responses created in the course of running your application, or they could be created solely for the purpose of storing data for later use.”

So the Cache API is a storage for network responses that we have full control over.
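To give a feeling for what "full control" means, here is a minimal sketch that stores a response in the Cache API and reads it back. The function name and the cache name are made up for this example, and the storage is passed in as a parameter (defaulting to the browser's global caches object) only to make the flow easy to follow.

```javascript
// Minimal sketch of the Cache API: store a response, then read it back.
// The cache name "demo-cache-v1" is a made-up example; `cacheStorage`
// defaults to the browser's global `caches` object.
async function saveAndRead(url, response, cacheStorage = globalThis.caches) {
  const cache = await cacheStorage.open('demo-cache-v1');
  // A response body can be read only once, so we store a clone.
  await cache.put(url, response.clone());
  // match() resolves to the stored response, or undefined if nothing matches.
  return cache.match(url);
}
```

In a browser this could be called as saveAndRead('/api/product/1', await fetch('/api/product/1')), where the URL is of course just a placeholder.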

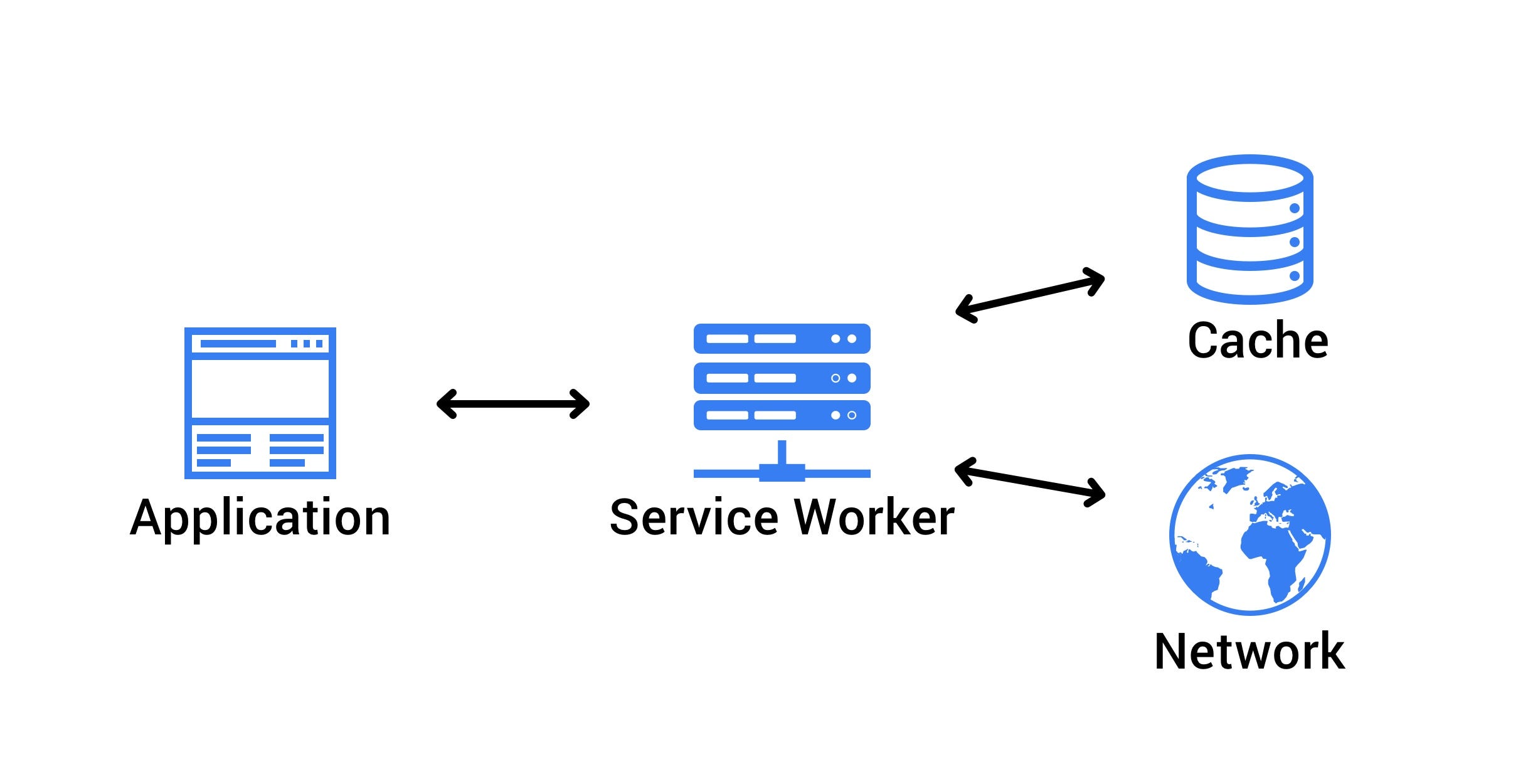

Now what is a Service Worker? You have probably already heard this term. It’s still a hot topic in the web dev community. A Service Worker, in short, is JavaScript code that runs in a different thread than your app and acts like a proxy between the browser and the network. It means that we have full control over network requests and responses in our web app! We can change the request URL, parameters or even the response. Do you see where it’s going?

With Service Workers and the Cache API we can programmatically serve files that we have previously put in the cache as responses to specific network requests. Now we have full control over when and what is cached as well as when we invalidate it. Because Service Workers act as a proxy, we can even make our application work offline by proxying all network requests to cache storage when connectivity is not stable. How cool is that?!
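The proxy idea described above can be sketched in a few lines. This is a simplified illustration under my own assumptions, not production code: the function name cacheFirst is arbitrary, and the cache storage and fetch function are parameters (defaulting to the Service Worker globals) so that the logic stays readable on its own.

```javascript
// sw.js (sketch): answer requests from the cache first, fall back to the network.
// `cacheStorage` and `fetchFn` default to the global `caches` and `fetch`
// that are available inside a Service Worker.
async function cacheFirst(request, cacheStorage = globalThis.caches, fetchFn = globalThis.fetch) {
  const cached = await cacheStorage.match(request);
  if (cached) {
    return cached; // Served without touching the network, so it works offline too.
  }
  return fetchFn(request);
}

// Register the handler only when this file runs inside a Service Worker.
if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('fetch', (event) => {
    // respondWith() lets us decide what the page receives for this request.
    event.respondWith(cacheFirst(event.request));
  });
}
```

Every request the page makes passes through the fetch listener, which is what lets us decide per request whether the cache or the network should answer.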

Moreover, we are no longer limited by very tight storage limits, so we can cache many more assets!

With so many possibilities you may be wondering what would be the best way to utilize these goods. Fortunately there are already established best practices for this combination.

The App Shell model

App Shell architecture is one of the most efficient ways of building web apps that load almost instantly. The App Shell is a minimal set of static assets (CSS, HTML, JS, images) required for your app to work. Such assets should be put in a cache and served directly from there on subsequent visits.

Now instead of a white screen your users will instantly see the interface of your application while dynamic content is downloaded in the background. Because we deliver something to our users immediately, they are less likely to bounce before the app loads!
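Done by hand, caching the App Shell could look roughly like the sketch below. The cache name and the file list are placeholders that I made up; in a real project the list would be generated from the build output, which is what the tool introduced in the next section does for us.

```javascript
// Sketch: precache a hypothetical App Shell when the Service Worker installs.
// The cache name and the file list are placeholders for illustration.
const SHELL_CACHE = 'app-shell-v1';
const SHELL_FILES = ['/', '/index.html', '/css/app.css', '/js/app.js'];

async function precacheShell(cacheStorage = globalThis.caches) {
  const cache = await cacheStorage.open(SHELL_CACHE);
  // addAll() fetches every URL and stores the responses.
  // It rejects if any of the requests fails.
  await cache.addAll(SHELL_FILES);
}

// Register the handler only when this file runs inside a Service Worker.
if (typeof ServiceWorkerGlobalScope !== 'undefined' && self instanceof ServiceWorkerGlobalScope) {
  self.addEventListener('install', (event) => {
    // Keep the worker in the "installing" state until the shell is cached.
    event.waitUntil(precacheShell());
  });
}
```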

This concept is very simple yet really powerful! Thanks to the hard work of Google developers it’s equally simple to implement.

Using Workbox to cache the App Shell

Mastering Service Workers and the Cache API can be a time-consuming process, but thankfully there is a great library that lets us benefit from these browser features without learning their complicated APIs: Workbox.

By answering a few simple questions on the CLI you can generate a ready-to-use Service Worker that will cache your App Shell. Let’s see how to do this in a fresh Vue CLI project.

First we have to install Workbox CLI:

npm install workbox-cli --global

The next step is to navigate into our app’s root directory and run the wizard, which will generate a configuration file for the CLI. There we specify things such as the location of production files, what to cache, etc.

workbox wizard

Based on the answers provided, the workbox wizard will generate a workbox-config.js file with content similar to this:

module.exports = {
  "globDirectory": "dist/",
  "globPatterns": [
    "**/*.{css,ico,png,html,js}"
  ],
  "swDest": "dist/sw.js"
};

Once we have the configuration it’s time to generate the Service Worker itself. We can do this with the following command:

workbox generateSW workbox-config.js

You will find your Service Worker in dist/sw.js. Now the only thing left is to register the Service Worker in our app. We can do this by pasting the following snippet into main.js:

// Check that service workers are supported
if ('serviceWorker' in navigator) {
  // Use the window load event to keep the page load performant
  window.addEventListener('load', () => {
    navigator.serviceWorker.register('/sw.js');
  });
}

That's it! Now when we build our app and run it in production mode, after opening the console we should see a message from Workbox telling us that the App Shell has been successfully cached!

If you’re not sure which files are in the cache, you can find them under the “Application” → “Cache Storage” tab of Chrome DevTools.

If you turn off your network connectivity and refresh the page you will still see the cached App Shell on your screen. This is a perfect place to start making your app offline-ready.

TIP: You can achieve the same results just by using the PWA plugin for Vue CLI or the PWA module for Nuxt.js.

Caching dynamic requests

Even though caching the App Shell can drastically improve perceived performance, there are a bunch of other assets that can also be cached to improve the loading time of your app. Such assets are usually either less important or change frequently, so downloading all of them upfront could be a waste of time and bandwidth. For such requests we can use runtime caching.

As opposed to the previous method, where we cache all the vital static assets upfront, here we cache only the responses to requests that our app has actually made. For instance, when we enter a product page on an eCommerce website we could cache the JSON object that holds the product information, so the next time we visit this particular product we don’t have to wait for it.

To enable runtime caching we have to slightly modify our workbox-config.js and add the following part:

module.exports = {
  globDirectory: "dist/",
  globPatterns: [
    "**/*.{css,ico,html,js}"
  ],
  swDest: "dist/sw.js",
  runtimeCaching: [{
    urlPattern: /\.(?:png|jpg|jpeg|svg|json)$/,
    handler: 'StaleWhileRevalidate'
  }]
};

Reminder: Don’t forget to rebuild your Service Worker each time you change your config file.

Let’s quickly review what happened here:

runtimeCaching: [{

First we added a new property that adds runtime caching capabilities to our Service Worker.

urlPattern: /\.(?:png|jpg|jpeg|svg|json)$/,

Then we specified which files we want to cache at runtime. In this case, all the images and JSON responses.

handler: 'StaleWhileRevalidate',

This part is very important because it tells Workbox how the cache should work. There are multiple caching strategies and the most popular ones are Cache First (if the asset is in the cache, serve it from there and skip the network), Network First (serve from the cache only when offline or when the network fails) and Stale While Revalidate, which we have used here.

How does this strategy work?

The request goes from the page to the Service Worker. If the response is in the cache, it is served from there, but at the same time a network request is made and the cache is updated with the newest response. If the asset is not in the cache, it’s downloaded from the network and cached.
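The flow described above can be sketched as a plain function. This is my simplified illustration of the idea, not the code Workbox generates: the function and parameter names are assumptions, and a real implementation would also check the response status before caching it.

```javascript
// Sketch of Stale While Revalidate as a plain function.
// `cache` is an opened Cache (or anything with match/put) and `fetchFn`
// defaults to the global fetch; both parameter names are assumptions.
async function staleWhileRevalidate(request, cache, fetchFn = globalThis.fetch) {
  const cached = await cache.match(request);

  // Always start a network request so the cache gets refreshed.
  const fromNetwork = fetchFn(request).then(async (response) => {
    await cache.put(request, response.clone());
    return response;
  });

  if (cached) {
    // Don't let a failed background refresh surface as an unhandled rejection.
    fromNetwork.catch(() => {});
    return cached; // Possibly outdated response, returned immediately.
  }
  return fromNetwork; // Nothing cached yet: wait for the network.
}
```

Inside a real Service Worker the background refresh should additionally be handed to event.waitUntil(), so the browser doesn't stop the worker before the cache is updated.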

Using the Stale While Revalidate strategy lets your users benefit from responses served immediately from the cache while keeping them up to date.
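The other strategies mentioned earlier are configured the same way, and each URL pattern can get its own. Below is a sketch of such a config; the URL patterns, cache names and limits are assumptions for illustration, so adjust them to what your app actually requests.

```javascript
// workbox-config.js (sketch): a different strategy per kind of request.
// URL patterns, cache names and limits are illustrative assumptions.
const config = {
  globDirectory: 'dist/',
  globPatterns: ['**/*.{css,ico,html,js}'],
  swDest: 'dist/sw.js',
  runtimeCaching: [
    {
      // Images rarely change: serve from the cache, use the network only on a miss.
      urlPattern: /\.(?:png|jpg|jpeg|svg)$/,
      handler: 'CacheFirst',
      options: {
        cacheName: 'images',
        // Keep at most 50 images, each for up to 30 days.
        expiration: { maxEntries: 50, maxAgeSeconds: 30 * 24 * 60 * 60 },
      },
    },
    {
      // API data should be fresh: try the network, fall back to the cache.
      urlPattern: /\/api\//,
      handler: 'NetworkFirst',
      options: { cacheName: 'api', networkTimeoutSeconds: 3 },
    },
  ],
};

module.exports = config;
```

Separate cache names keep the groups apart in Cache Storage, so an expiration limit on images doesn't evict API responses.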

Summary

There are many faces of browser cache. One we can use to fulfill even the toughest requirements, while the other remains mostly out of our control and the only thing we can do is make small adjustments. No matter which one you decide to rely on most in your next project, knowing how they work is crucial to building well-performing web applications.
