Nuxt SSR Optimizing Tips

Part of the series: Vue.js Performance

In today’s part of the Vue Performance series we will focus on the most exciting framework in the Vue.js ecosystem - Nuxt! Specifically, we will focus on how its Server-Side Rendering (SSR) mechanism impacts performance and what we can do to optimize it. Of course, all previous tips from this series still apply in Nuxt!

How does server-side rendering work?

To learn how to optimize SSR it’s crucial to understand how it works and how it differs from client-side rendering.

When we enter a client-side rendered SPA (Single-Page Application) we first see a blank screen with the content of index.html (usually just a <div id="app"></div> in the body). Then JavaScript starts executing and creates the UI dynamically and progressively. We can see the content on our screen changing until it’s fully loaded.

First we usually see the header, footer and some pieces of the page content. The metric describing the time required for the first bits of content to appear on the screen is called First Contentful Paint - FCP. Other pieces of the UI keep appearing until the app is visually complete and fully functional. The metric that measures the time required for the app to become fully interactive is called Time To Interactive - TTI.

Side note: If you want to learn more about the metrics used to measure web performance, check out this great article by Artem Denysov.

In server-side rendered apps we don’t see this progression. This is how they load:

- First, the JavaScript code is executed on the server and Vue generates a static HTML file that contains the full markup of your application.

- This static HTML file is sent to the browser. The metric describing the time required for the user to receive it is called Time to First Byte (TTFB).

- Once it’s downloaded, the user almost immediately sees the full page (but it’s not yet interactive!).

- JavaScript is executed on the client side, takes over control of the static HTML and makes it interactive. This process is called hydration. You can think of it as providing static (dehydrated) HTML with Vue interactivity (water).

- The hydrated app becomes interactive (TTI).

It’s important to mention that the server-side rendering process happens only when we directly enter the website (or refresh it). Once hydrated, the app behaves like a normal client-side rendered SPA.

Which metric should we focus on?

Knowing how Server-Side Rendering works we can conclude that there are two key metrics we have to optimize our application for to achieve great performance in this field:

- Time to First Byte (TTFB) - in other words, the time until the rendered static HTML arrives at the user’s browser. It’s crucial to keep this metric as low as possible (ideally around 1s on an average network) because, unlike with progressively loaded client-side rendered apps, the user won’t see anything until the full page is downloaded.

- Time to Interactive (TTI) - Even if our server-side rendered page could be quickly delivered to the user it’s equally crucial to make it interactive as soon as possible. Otherwise our users could get frustrated with dynamic elements not working as intended.

Side note: Please keep in mind that our application behaves like a normal SPA while we’re doing in-app navigation so we still have to take care of the bundle size of other assets and runtime performance!

Optimizing Time to First Byte

We can split the total Time to First Byte into 2 phases:

- The execution phase, when the server-side code is executed and the static HTML file is generated

- The "downloading" phase, when the generated HTML file is downloaded to the user’s browser
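One rough way to look at these two phases in practice is the browser’s Navigation Timing API. The sketch below assumes an object shaped like a `PerformanceNavigationTiming` entry; the helper name `splitDocumentTiming` is made up for illustration. Keep in mind that browser tooling usually reports TTFB as the moment the response starts arriving, so the download of the HTML shows up as a separate number.

```javascript
// Splits a PerformanceNavigationTiming-like entry into the two phases
// described above. `splitDocumentTiming` is a hypothetical helper name.
function splitDocumentTiming(entry) {
  return {
    // Time spent waiting for the server to render and start responding.
    waitingMs: entry.responseStart - entry.requestStart,
    // Time spent downloading the generated HTML document.
    downloadMs: entry.responseEnd - entry.responseStart,
  };
}

// In a browser, read the entry for the current page load.
if (typeof window !== 'undefined' && window.performance) {
  const [navigation] = window.performance.getEntriesByType('navigation');
  if (navigation) {
    console.log(splitDocumentTiming(navigation));
  }
}
```

If the download number grows while the waiting number stays flat, the size of the generated HTML is the likely culprit.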

We already know how to optimize the execution phase from the previous articles. Most of the known client-side optimization techniques will influence this metric positively.

The tricky part is the downloading phase. It strictly correlates to the size of the outputted HTML file and this could easily get out of our control. To understand why, we have to learn how Nuxt is conveying data that was fetched on the server side to the client side and how it affects the size of server-side generated HTML.

Conveying server-side data

I wrote that the same code is executed on the server and client side. It implies that all asynchronous calls are also made on both sides, but this is not entirely true. If we write our code naively this is what happens, but that would be a huge waste of time and bandwidth. If we’re already fetching the data on the server side to generate the static HTML, what’s the point of doing this again on the client side?

The Nuxt core team is well aware of this issue, which is why they introduced fetch and asyncData, which are used to convey server-side data to the client side.

Take a look at this example from the Nuxt docs:

    <template>
      <div>
        <h1>Blog posts</h1>
        <p v-if="$fetchState.pending">Fetching posts...</p>
        <p v-else-if="$fetchState.error">
          Error while fetching posts: {{ $fetchState.error.message }}
        </p>
        <ul v-else>
          <li v-for="post of posts" :key="post.id">
            <n-link :to="`/posts/${post.id}`">{{ post.title }}</n-link>
          </li>
        </ul>
      </div>
    </template>

    <script>
    export default {
      data() {
        return {
          posts: []
        }
      },
      async fetch() {
        this.posts = await this.$http.$get(
          'https://jsonplaceholder.typicode.com/posts'
        )
      }
    }
    </script>

When you enter the page directly from the URL (so we make use of SSR) you won’t see an additional request to https://jsonplaceholder.typicode.com/posts in your network tab. This is because everything that was fetched in fetch on the server is available on the client side without additional network calls.

Okay, we know what happens but we don’t know HOW it happens. This data is not magically appearing on the client. There has to be a way of conveying it!

The only thing that we’re getting from the server is this huge index.html file so the data has to be there! If you check the source of this file you’ll notice a <script> tag at the bottom that adds a __NUXT__ object to window. This is where the server-side data is! Nuxt is picking up the new state from there automatically.

    <script>window.__NUXT__={layout:"default",data:[{},{posts:[{userId:1,id:1,title:"sunt aut facere repellat provident occaecati excepturi optio reprehenderit",body:"quia et suscipit\nsuscipit recusandae consequuntur expedita et cum\nreprehenderit molestiae ut ut quas totam\nnostrum rerum est autem sunt rem eveniet architecto"},{userId:1,id:2,title:"qui est esse",body:"est rerum tempore vitae\nsequi sint nihil reprehenderit dolor beatae ea dolores neque\nfugiat blanditiis voluptate porro vel nihil molestiae ut reiciendis\nqui aperiam non debitis possimus qui neque nisi nulla"},{userId:1,id:3,title:"ea molestias quasi exercitationem repellat qui ipsa sit aut",body:"et iusto sed quo iure\nvoluptatem occaecati omnis eligendi aut [...]"}]}],error:null,serverRendered:!0}</script>

Even though this powerful tool can save us time on fetching the content on the client side, it can also significantly increase the size of index.html. The more data we decide to convey, the bigger it becomes and the longer the user will have to wait to see any content. This is why we have to be very careful with the data that we’re sending to the client side!
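To get a feeling for how much a piece of state adds to the HTML, we can serialize it ourselves and count the bytes. The sketch below is a simplified stand-in, not Nuxt’s actual serializer: `renderStateScript` and `byteLength` are hypothetical helpers, and plain `JSON.stringify` is assumed to be close enough for a size estimate.

```javascript
// Simplified stand-in for the step that embeds server-side state in the HTML.
// Nuxt's real serializer differs; this is only meant for estimating size.
function renderStateScript(state) {
  // Escape "<" so a string such as "</script>" cannot close the tag early.
  const json = JSON.stringify(state).replace(/</g, '\\u003c');
  return `<script>window.__NUXT__=${json}</script>`;
}

// Number of bytes the text occupies when encoded as UTF-8.
function byteLength(text) {
  return new TextEncoder().encode(text).length;
}

// The same post with and without its `body` field.
const fullPost = {
  userId: 1,
  id: 2,
  title: 'qui est esse',
  body: 'est rerum tempore vitae\nsequi sint nihil reprehenderit dolor beatae',
};
const smallPost = { id: fullPost.id, title: fullPost.title };

const fullBytes = byteLength(renderStateScript({ data: [{ posts: [fullPost] }] }));
const smallBytes = byteLength(renderStateScript({ data: [{ posts: [smallPost] }] }));

console.log({ fullBytes, smallBytes });
```

Multiply the difference by the number of items on a real page and the effect on the size of index.html becomes easy to see.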

There is really only one thing we can do to avoid performance issues in this area - not send data that we don’t have to. It sounds obvious, but what kind of data is that exactly?

I’d say that everything we need to display SEO-critical parts of our website such as navigation or main page content should always be conveyed to the frontend side.

We don’t have to convey content that is not important to crawlers, like data that is available only to logged-in users, any other personalized content (like shopping carts), and the content of pop-ups or off-screen sidebars.

Additionally, we should make the objects that have to be sent to the client as small as possible:

- If you’re using GraphQL you should always limit the fields in the query to the ones that are actually used

- If you’re not using GraphQL try to limit the fields you’re sending to the necessary ones by removing the others from the object you’re saving to the component state. For example, in the code below we only need the id and title properties from posts, so we can strip the other ones

    <script>
    export default {
      data() {
        return {
          posts: []
        }
      },
      async fetch() {
        // Keep only the fields the template actually uses.
        this.posts = await this.$http
          .$get('https://jsonplaceholder.typicode.com/posts')
          .then(posts =>
            posts.map(post => ({
              title: post.title,
              id: post.id
            }))
          )
      }
    }
    </script>

Now that we know how to optimize the TTFB, let’s see what we can do to make our app interactive faster.

Important side note: Please keep in mind that limiting the conveyed data is also a tradeoff! You’re getting better performance, but if the JS execution fails (and therefore hydration), your app could become unusable if it misses some crucial data. If you want your app to work even when JavaScript fails, you have to make sure that you’re conveying everything that is required for user navigation. You also have to get rid of dynamic UI elements like off-screen sidebars and pop-ups because they won’t work without JavaScript.

Optimizing Time to Interactive

The overall amount of JS code in our app and the number of components that have to be hydrated are the two key factors affecting the Time to Interactive metric. We already know effective code-splitting techniques from previous parts of this series that can minimize the amount of JavaScript in the critical path, but is there something we can do to minimize the number of hydrated components?

Fortunately, thanks to the amazing vue-lazy-hydration library made by Markus Oberlehner we can do a lot!

As we can read in the library’s README:

"vue-lazy-hydration is a renderless Vue.js component to improve Estimated Input Latency and Time to Interactive of server-side rendered Vue.js applications."

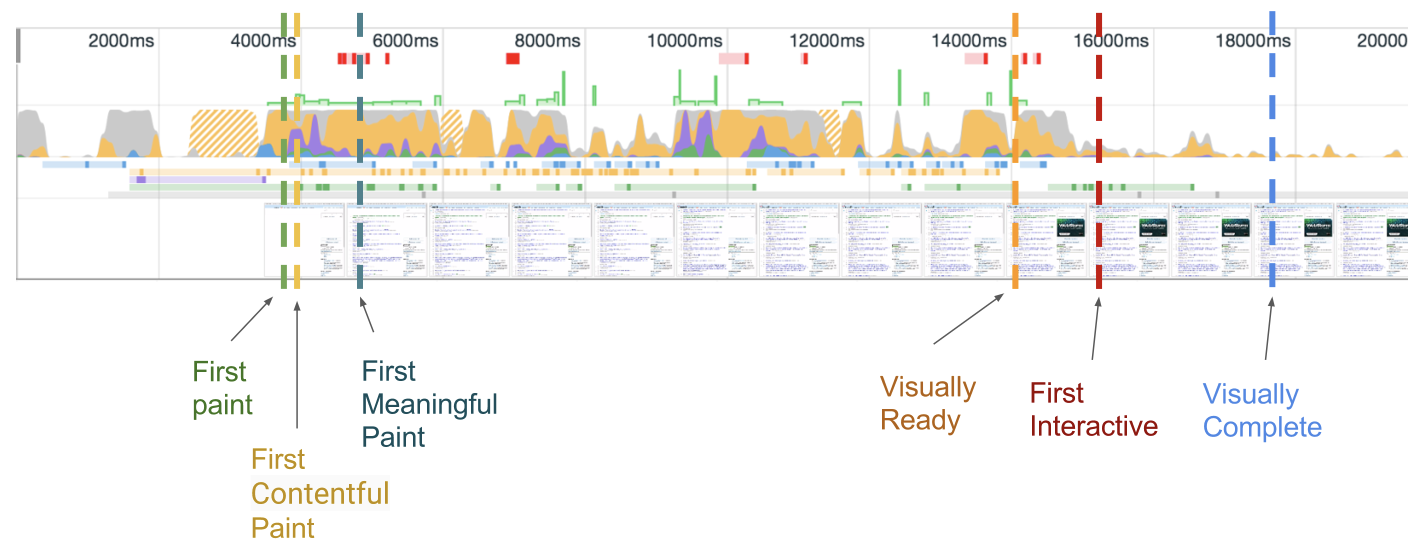

Exactly what we’re looking for! The README provides us with an example showing what results we can expect:

These are the results of the test project without lazy hydration:

And these - with lazy hydration applied:

As we can see, TTI in the above example was 25% lower with vue-lazy-hydration (of course your results can be completely different)! You will see in a minute that this library is extremely easy to use, which makes it a great tool for achieving quick and significant performance improvements.

To install the library, simply add it to your project through npm or yarn:

    npm install vue-lazy-hydration --save

Now we have access to the LazyHydrate component that we can use to wrap other components and delay their hydration.
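To build some intuition for what delaying hydration until a component is visible means, here is a minimal sketch of the idea in plain JavaScript. It is not the library’s implementation: `hydrateWhenVisible` is a hypothetical helper, the `hydrate` callback stands in for whatever makes the component interactive, and the `#ad-slider` selector is made up for the usage example.

```javascript
// Runs `hydrate` once, the first time `element` enters the viewport.
// `ObserverImpl` is injectable so the sketch can also run outside a browser.
function hydrateWhenVisible(element, hydrate, ObserverImpl = globalThis.IntersectionObserver) {
  if (typeof ObserverImpl !== 'function') {
    // No IntersectionObserver available: fall back to hydrating right away.
    hydrate();
    return () => {};
  }

  const observer = new ObserverImpl((entries) => {
    if (entries.some((entry) => entry.isIntersecting)) {
      // Stop observing first so `hydrate` runs only once.
      observer.disconnect();
      hydrate();
    }
  });
  observer.observe(element);

  // Lets the caller cancel the pending hydration.
  return () => observer.disconnect();
}

// Usage in a browser (hypothetical element id):
if (typeof document !== 'undefined') {
  const slider = document.querySelector('#ad-slider');
  if (slider) {
    hydrateWhenVisible(slider, () => console.log('hydrate the slider now'));
  }
}
```

Until the callback fires, the server-rendered HTML simply stays on the page as static markup, which is why nothing is lost visually by waiting.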

For example, this is how we can delay a component’s hydration until it’s visible on the screen:

    <template>
      <div>
        <LazyHydrate when-visible>
          <AdSlider/>
        </LazyHydrate>
      </div>
    </template>

    <script>
    import LazyHydrate from 'vue-lazy-hydration';

    export default {
      components: {
        LazyHydrate,
        AdSlider: () => import('./AdSlider.vue'),
      },
      // ...
    };
    </script>

TIP: The library also lets you hydrate components under other conditions (like on-interaction). You can check out the available options in the README.

And that’s it! Using this library is ridiculously simple. Now let’s see when we could make use of it.

We can divide our components into three groups:

Components that have to be hydrated immediately

Usually these are the components that are immediately visible on the screen (aka above the fold). We can’t do much about them. They just have to be hydrated.

Components that can be hydrated later

In most cases these are either off-screen components or below the fold components.

Hydrating them when they become visible is in most cases the right strategy to choose.

    <LazyHydrate when-visible>
      <LazyHydratedComponent/>
    </LazyHydrate>

Components that don’t have to be hydrated at all

Yes! There are such components. We often have components that only display some text and are not interactive in any way. Such components, once rendered on the server, can remain static and don’t have to be hydrated at all.

We can achieve that with the ssr-only prop on the LazyHydrate component:

    <LazyHydrate ssr-only>
      <ArticleContent />
    </LazyHydrate>

Summary

Optimizing Nuxt performance is not that much different from optimizing any other Vue.js application. The client-side part works like a regular Vue app, but on the initial visit the page is rendered on the server, so users see a blank screen until the rendered page is delivered. This is why it’s crucial to deliver this initial content as soon as possible. If we know the areas of potential performance bottlenecks it should be a piece of cake!
